Forecasting with Exogenous Regressors

This notebook provides examples of the accepted data structures for passing the expected value of exogenous variables when these are included in the mean. For example, consider an AR(1) with 2 exogenous variables. The mean dynamics are

\[Y_t = \phi_0 + \phi_1 Y_{t-1} + \beta_0 X_{0,t} + \beta_1 X_{1,t} + \epsilon_t.\]

The \(h\)-step forecast, \(E_{T}[Y_{T+h}]\), depends on the conditional expectation of \(X_{0,T+h}\) and \(X_{1,T+h}\),

\[E_{T}[Y_{T+h}] = \phi_0 + \phi_1 E_{T}[Y_{T+h-1}] + \beta_0 E_{T}[X_{0,T+h}] + \beta_1 E_{T}[X_{1,T+h}],\]

where \(E_{T}[Y_{T+h-1}]\) has been recursively computed.
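The recursion can be sketched in a few lines of NumPy. This is only an illustration of the formula, not the code that arch runs internally; the function name `arx1_mean_forecast` and the parameter values are assumptions chosen for the example.

```python
import numpy as np


def arx1_mean_forecast(phi0, phi1, betas, y_last, x_expected):
    """Recursive mean forecasts for an AR(1) with exogenous regressors.

    x_expected has shape (n_regressors, horizon) and holds the expected
    value of each regressor at steps 1, ..., horizon.
    """
    x_expected = np.asarray(x_expected, dtype=float)
    horizon = x_expected.shape[1]
    out = np.empty(horizon)
    prev = y_last  # the last observed value starts the recursion
    for h in range(horizon):
        out[h] = phi0 + phi1 * prev + betas @ x_expected[:, h]
        prev = out[h]  # later steps use the previous forecast
    return out


# Illustrative values: with these betas the two regressors cancel, so the
# forecast stays at phi0 / (1 - phi1) when started from that value.
fc = arx1_mean_forecast(3.0, 0.7, np.array([2.0, -2.0]), 10.0, np.ones((2, 3)))
print(fc)
```

Each step needs one expected value per regressor, which is why \(2 \times h\) values are required for a single forecast origin.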

In order to construct forecasts up to some horizon \(h\), it is necessary to pass \(2\times h\) values (\(h\) for each series). If using the features of forecast that allow many forecasts to be specified, it is necessary to supply \(n \times 2 \times h\) values, where \(n\) is the number of forecasts.

There are two general purpose data structures that can be used for any number of exogenous variables and any number of steps ahead:

- dict: The values can be passed using a dict where the keys are the variable names and the values are 2-dimensional arrays. This is the most natural generalization of a pandas DataFrame to 3 dimensions.
- array: The values can alternatively be passed as a 3-d NumPy array where dimension 0 tracks the regressor index, dimension 1 is the time period and dimension 2 is the horizon.

When a model contains a single exogenous regressor it is possible to use a 2-d array or DataFrame where dimension 0 tracks the time period where the forecast is generated and dimension 1 tracks the horizon.

In the special case where a model contains a single regressor and the horizon is 1, then a 1-d array or pandas Series can be used.
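As a rough illustration of the two general-purpose layouts, the sketch below builds both from the same made-up expected values and checks that they hold the same \(n \times 2 \times h\) numbers. The sizes and the random values are placeholders, not output from any model.

```python
import numpy as np

n_forecasts, horizon = 5, 10  # placeholder sizes
rng = np.random.default_rng(0)
x0_expected = rng.standard_normal((n_forecasts, horizon))
x1_expected = rng.standard_normal((n_forecasts, horizon))

# dict layout: one (n_forecasts, horizon) array per variable name
as_dict = {"x0": x0_expected, "x1": x1_expected}

# array layout: (regressor, forecast origin, horizon)
as_array = np.stack([x0_expected, x1_expected])

n_values = sum(v.size for v in as_dict.values())
print(as_array.shape, n_values)
```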

[1]:

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import seaborn as sns

sns.set_style("darkgrid")

plt.rc("figure", figsize=(16, 6))

plt.rc("savefig", dpi=90)

plt.rc("font", family="sans-serif")

plt.rc("font", size=14)

Simulating data

Two \(X\) variables are simulated and are assumed to follow independent AR(1) processes. The data is then assumed to follow an ARX(1) with 2 exogenous regressors and GARCH(1,1) errors.

[2]:

from arch.univariate import ARX, GARCH, ZeroMean, arch_model

burn = 250

x_mod = ARX(None, lags=1)

x0 = x_mod.simulate([1, 0.8, 1], nobs=1000 + burn).data

x1 = x_mod.simulate([2.5, 0.5, 1], nobs=1000 + burn).data

resid_mod = ZeroMean(volatility=GARCH())

resids = resid_mod.simulate([0.1, 0.1, 0.8], nobs=1000 + burn).data

phi1 = 0.7

phi0 = 3

y = 10 + resids.copy()

for i in range(1, y.shape[0]):

    y[i] = phi0 + phi1 * y[i - 1] + 2 * x0[i] - 2 * x1[i] + resids[i]

x0 = x0.iloc[-1000:]

x1 = x1.iloc[-1000:]

y = y.iloc[-1000:]

y.index = x0.index = x1.index = np.arange(1000)

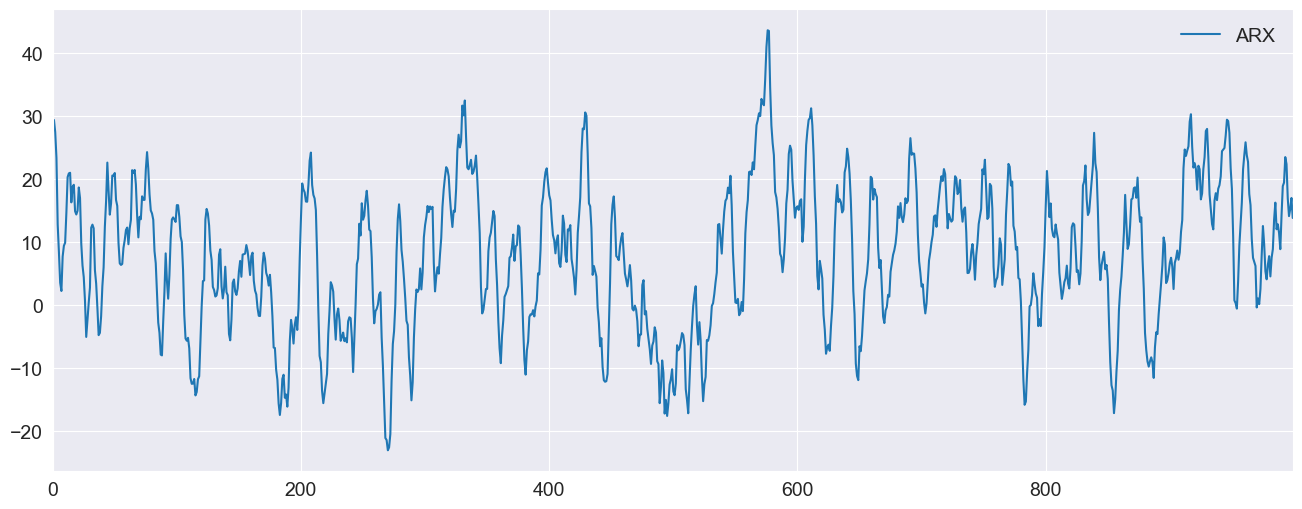

Plotting the data

[3]:

ax = pd.DataFrame({"ARX": y}).plot(legend=False)

ax.legend(frameon=False)

_ = ax.set_xlim(0, 999)

Forecasting the X values

The forecasts of \(Y\) depend on forecasts of \(X_0\) and \(X_1\). Both of these follow a simple AR(1) process, and so we can construct the forecasts for all time horizons. Note that the value in position [i,j] is the time-i forecast for horizon j+1.

[4]:

x0_oos = np.empty((1000, 10))

x1_oos = np.empty((1000, 10))

for i in range(10):

    if i == 0:

        last = x0

    else:

        last = x0_oos[:, i - 1]

    x0_oos[:, i] = 1 + 0.8 * last

    if i == 0:

        last = x1

    else:

        last = x1_oos[:, i - 1]

    x1_oos[:, i] = 2.5 + 0.5 * last

x1_oos[-1]

[4]:

array([5.46067554, 5.23033777, 5.11516888, 5.05758444, 5.02879222,

5.01439611, 5.00719806, 5.00359903, 5.00179951, 5.00089976])
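The same forecasts can also be written in closed form, since for an AR(1) with constant \(c\) and coefficient \(\phi\) the \(h\)-step forecast is \(c(1-\phi^h)/(1-\phi) + \phi^h X_t\). The sketch below is an alternative to the loop above rather than a replacement for it; the helper name `ar1_forecasts` and the small input vector are assumptions made for the example.

```python
import numpy as np


def ar1_forecasts(x, const, phi, horizon):
    """Closed-form AR(1) forecasts; entry [i, j] is the time-i forecast for horizon j+1."""
    x = np.asarray(x, dtype=float)
    phi_h = phi ** np.arange(1, horizon + 1)
    return const * (1 - phi_h) / (1 - phi) + np.outer(x, phi_h)


x = np.array([4.0, 5.0, 6.0])  # illustrative forecast origins
closed = ar1_forecasts(x, 2.5, 0.5, 10)

# Recursive construction, mirroring the loop used in the notebook
recursive = np.empty((3, 10))
last = x
for i in range(10):
    recursive[:, i] = 2.5 + 0.5 * last
    last = recursive[:, i]

print(np.max(np.abs(closed - recursive)))
```

Starting from the unconditional mean \(2.5 / (1 - 0.5) = 5\), every horizon forecasts 5, which matches the way the values above settle toward 5.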

Fitting the model

Next, the model is fit. The parameters are precisely estimated.

[5]:

exog = pd.DataFrame({"x0": x0, "x1": x1})

mod = arch_model(y, x=exog, mean="ARX", lags=1)

res = mod.fit(disp="off")

print(res.summary())

AR-X - GARCH Model Results

==============================================================================

Dep. Variable: data R-squared: 0.991

Mean Model: AR-X Adj. R-squared: 0.991

Vol Model: GARCH Log-Likelihood: -1333.33

Distribution: Normal AIC: 2680.66

Method: Maximum Likelihood BIC: 2715.00

No. Observations: 999

Date: Mon, Nov 24 2025 Df Residuals: 995

Time: 04:21:16 Df Model: 4

Mean Model

========================================================================

coef std err t P>|t| 95.0% Conf. Int.

------------------------------------------------------------------------

Const 3.3648 0.161 20.850 1.517e-96 [ 3.048, 3.681]

data[1] 0.7053 3.778e-03 186.699 0.000 [ 0.698, 0.713]

x0 1.9465 2.224e-02 87.536 0.000 [ 1.903, 1.990]

x1 -2.0278 2.531e-02 -80.123 0.000 [ -2.077, -1.978]

Volatility Model

==========================================================================

coef std err t P>|t| 95.0% Conf. Int.

--------------------------------------------------------------------------

omega 0.1120 3.989e-02 2.807 5.006e-03 [3.378e-02, 0.190]

alpha[1] 0.0772 2.646e-02 2.917 3.540e-03 [2.531e-02, 0.129]

beta[1] 0.7931 5.720e-02 13.865 1.028e-43 [ 0.681, 0.905]

==========================================================================

Covariance estimator: robust

Using a dict

The first approach uses a dict to pass the two variables. The key consideration here is the the keys of the dictionary must exactly match the variable names (x0 and x1 here). The dictionary here contains only the final row of the forecast values since forecast will only make forecasts beginning from the final in-sample observation by default.

Using DataFrame¶

While these examples make use of NumPy arrays, these can be DataFrames. This allows the index to be used to track the forecast origination point, which can be a helpful device.

[6]:

exog_fcast = {"x0": x0_oos[-1:], "x1": x1_oos[-1:]}

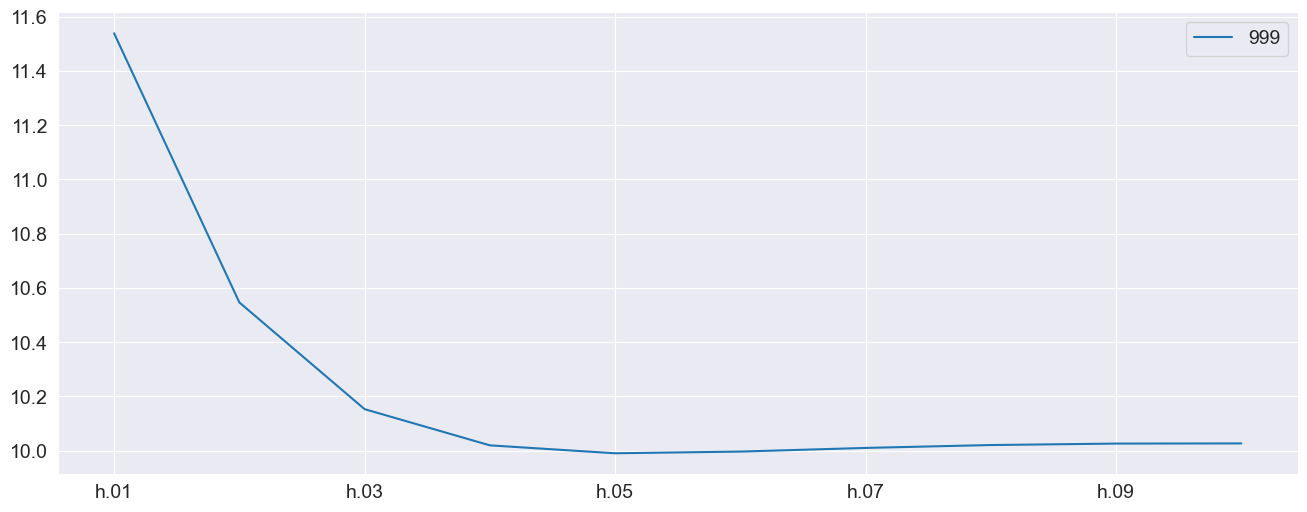

forecasts = res.forecast(horizon=10, x=exog_fcast)

forecasts.mean.T.plot()

[6]:

<Axes: >
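To make the DataFrame variant concrete, the sketch below wraps a block of expected values in a DataFrame whose index records the forecast origin. The values, the origins 995 to 999, and the `h.01`-style column labels are placeholders chosen for the illustration; the labels simply mirror those shown in the forecast output.

```python
import numpy as np
import pandas as pd

horizon = 10
origins = pd.RangeIndex(995, 1000)  # hypothetical forecast origins
values = np.arange(len(origins) * horizon, dtype=float).reshape(len(origins), horizon)
columns = [f"h.{h:02d}" for h in range(1, horizon + 1)]

# Rows are forecast origins, columns are horizons
x0_frame = pd.DataFrame(values, index=origins, columns=columns)

# Selecting with a list keeps the result 2-dimensional (1 row by 10 columns)
final_origin = x0_frame.loc[[999]]
print(final_origin.shape)
```

A frame like this could take the place of one of the arrays in the dict, with the index making it easy to see which row belongs to which origin.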

Using an array

An array can alternatively be used. This frees the restriction on matching the variable names although the order must match instead. The forecast values are 2 (variables) by 1 (forecast) by 10 (horizon).

[7]:

exog_fcast = np.array([x0_oos[-1:], x1_oos[-1:]])

print(f"The shape is {exog_fcast.shape}")

array_forecasts = res.forecast(horizon=10, x=exog_fcast)

print(array_forecasts.mean - forecasts.mean)

The shape is (2, 1, 10)

h.01 h.02 h.03 h.04 h.05 h.06 h.07 h.08 h.09 h.10

999 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0
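Because the array form relies on position rather than names, one way to reduce the risk of ordering mistakes is to build the array from a dict using an explicit column order. The helper `dict_to_array` below is hypothetical and is not part of arch; the constant values are placeholders.

```python
import numpy as np


def dict_to_array(x_by_name, column_order):
    """Stack per-variable (n_forecasts, horizon) arrays in the given order."""
    return np.stack(
        [np.asarray(x_by_name[name], dtype=float) for name in column_order]
    )


# The dict is deliberately built in the "wrong" order
x_by_name = {"x1": np.full((1, 10), 5.0), "x0": np.full((1, 10), 1.0)}
stacked = dict_to_array(x_by_name, ["x0", "x1"])
print(stacked.shape)
```

In the notebook the order would come from the columns of the exog DataFrame used to fit the model, for example `list(exog.columns)`.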

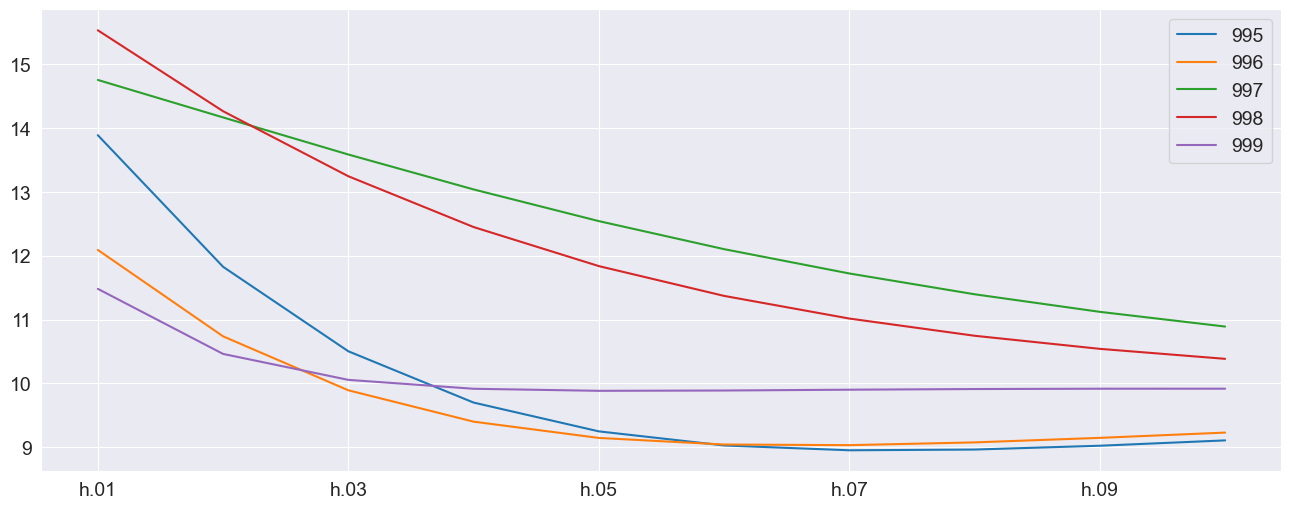

Producing multiple forecasts

forecast can produce multiple forecasts using the same fitted model. Here the model is fit to the first 500 observations and then forecasts for the remaining values are produced. It must be the case that the x values passed to forecast have the same number of rows as the table of forecasts produced.

[8]:

res = mod.fit(disp="off", last_obs=500)

exog_fcast = {"x0": x0_oos[-500:], "x1": x1_oos[-500:]}

multi_forecasts = res.forecast(start=500, horizon=10, x=exog_fcast)

multi_forecasts.mean.tail(10)

[8]:

| | h.01 | h.02 | h.03 | h.04 | h.05 | h.06 | h.07 | h.08 | h.09 | h.10 |

|---|---|---|---|---|---|---|---|---|---|---|

| 990 | 19.143641 | 18.158693 | 17.392944 | 16.666003 | 15.937598 | 15.217332 | 14.526513 | 13.883786 | 13.301141 | 12.784047 |

| 991 | 19.550628 | 18.980455 | 18.256273 | 17.429481 | 16.563236 | 15.709863 | 14.905534 | 14.171625 | 13.518095 | 12.946926 |

| 992 | 16.743818 | 15.302423 | 14.116456 | 13.174990 | 12.442437 | 11.878835 | 11.447845 | 11.119198 | 10.868785 | 10.677876 |

| 993 | 12.804902 | 11.464925 | 10.580023 | 10.024513 | 9.698397 | 9.526739 | 9.455541 | 9.447025 | 9.475426 | 9.523615 |

| 994 | 10.290331 | 9.763452 | 9.585342 | 9.563151 | 9.604547 | 9.666523 | 9.730023 | 9.787416 | 9.836367 | 9.876858 |

| 995 | 4.924282 | 4.475312 | 4.768466 | 5.353045 | 6.012819 | 6.648904 | 7.220636 | 7.715694 | 8.135038 | 8.485353 |

| 996 | 3.673177 | 3.588021 | 3.936349 | 4.498184 | 5.142818 | 5.794415 | 6.411020 | 6.971547 | 7.467585 | 7.898231 |

| 997 | 1.271340 | 2.149341 | 3.234704 | 4.310152 | 5.285294 | 6.130811 | 6.845654 | 7.440838 | 7.931567 | 8.333534 |

| 998 | -2.857484 | -1.200377 | 0.689355 | 2.453864 | 3.970887 | 5.222239 | 6.231481 | 7.035302 | 7.671100 | 8.172198 |

| 999 | -1.535465 | -0.056001 | 1.504171 | 2.952380 | 4.219401 | 5.293260 | 6.186771 | 6.921866 | 7.522284 | 8.010361 |

The plot of the final 5 forecast paths shows the mean reversion of the process.

[9]:

_ = multi_forecasts.mean.tail().T.plot()

The previous example made use of dictionaries where each of the values was a 500 (number of forecasts) by 10 (horizon) array. The alternative format can be used where x is a 3-d array with shape 2 (variables) by 500 (forecasts) by 10 (horizon).

[10]:

exog_fcast = np.array([x0_oos[-500:], x1_oos[-500:]])

print(exog_fcast.shape)

array_multi_forecasts = res.forecast(start=500, horizon=10, x=exog_fcast)

np.max(np.abs(array_multi_forecasts.mean - multi_forecasts.mean))

(2, 500, 10)

[10]:

np.float64(0.0)

x input array sizes

While the natural shape of the x data is the number of forecasts, it is also possible to pass an x that has the same shape as the y used to construct the model. This may simplify tracking the origin points of the forecast. Values that are not needed are ignored. In this example, the out-of-sample values are 2 by 1000 (original number of observations) by 10. Only the final 500 are used.

WARNING

Other sizes are not allowed. The size of the out-of-sample data must either match the original data size or the number of forecasts.
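The size rule can be written as a small check. This is a sketch of the rule as stated here, not arch's own validation code; the helper name `select_forecast_rows` is hypothetical.

```python
import numpy as np


def select_forecast_rows(x, nobs, start):
    """Return the rows of a (regressor, time, horizon) array used for forecasts.

    Accepts either one row per forecast (nobs - start rows) or one row per
    original observation (nobs rows); any other size is rejected.
    """
    x = np.asarray(x)
    n_forecasts = nobs - start
    rows = x.shape[1]
    if rows == n_forecasts:
        return x
    if rows == nobs:
        return x[:, start:]  # earlier rows are not needed
    raise ValueError(f"x has {rows} rows; expected {n_forecasts} or {nobs}")


full = np.zeros((2, 1000, 10))
print(select_forecast_rows(full, nobs=1000, start=500).shape)
```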

[11]:

exog_fcast = np.array([x0_oos, x1_oos])

print(exog_fcast.shape)

array_multi_forecasts = res.forecast(start=500, horizon=10, x=exog_fcast)

np.max(np.abs(array_multi_forecasts.mean - multi_forecasts.mean))

(2, 1000, 10)

[11]:

np.float64(0.0)

Special Cases with a single x variable

When a model consists of a single exogenous regressor, then x can be a 1-d or 2-d array (or Series or DataFrame).

[12]:

mod = arch_model(y, x=exog.iloc[:, :1], mean="ARX", lags=1)

res = mod.fit(disp="off")

print(res.summary())

AR-X - GARCH Model Results

==============================================================================

Dep. Variable: data R-squared: 0.934

Mean Model: AR-X Adj. R-squared: 0.934

Vol Model: GARCH Log-Likelihood: -2305.88

Distribution: Normal AIC: 4623.75

Method: Maximum Likelihood BIC: 4653.20

No. Observations: 999

Date: Mon, Nov 24 2025 Df Residuals: 996

Time: 04:21:17 Df Model: 3

Mean Model

========================================================================

coef std err t P>|t| 95.0% Conf. Int.

------------------------------------------------------------------------

Const -6.8670 0.274 -25.098 5.184e-139 [ -7.403, -6.331]

data[1] 0.7589 1.025e-02 74.032 0.000 [ 0.739, 0.779]

x0 1.8354 6.148e-02 29.856 7.416e-196 [ 1.715, 1.956]

Volatility Model

==========================================================================

coef std err t P>|t| 95.0% Conf. Int.

--------------------------------------------------------------------------

omega 5.0309 1.470 3.421 6.231e-04 [ 2.149, 7.913]

alpha[1] 0.1249 4.186e-02 2.984 2.845e-03 [4.287e-02, 0.207]

beta[1] 0.0360 0.245 0.147 0.883 [ -0.443, 0.515]

==========================================================================

Covariance estimator: robust

These two examples show that both formats can be used.

[13]:

forecast_1d = res.forecast(horizon=10, x=x0_oos[-1])

forecast_2d = res.forecast(horizon=10, x=x0_oos[-1:])

print(forecast_1d.mean - forecast_2d.mean)

## Simulation-forecasting

mod = arch_model(y, x=exog, mean="ARX", lags=1, power=1.0)

res = mod.fit(disp="off")

h.01 h.02 h.03 h.04 h.05 h.06 h.07 h.08 h.09 h.10

999 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

Simulation

forecast supports simulating paths. When forecasting a model with exogenous variables, the same values are used in all mean paths. If you wish to also simulate the paths of the x variables, these need to be generated and then passed inside a loop.

Static out-of-sample x

This first example shows the variance of the paths when the same x values are used in the forecast. In a sense, the out-of-sample x are treated as deterministic.

[14]:

x = {"x0": x0_oos[-1], "x1": x1_oos[-1]}

sim_fixedx = res.forecast(horizon=10, x=x, method="simulation", simulations=100)

sim_fixedx.simulations.values.std(1)

[14]:

array([[0.95100158, 1.23536616, 1.23845518, 1.29787466, 1.37324683,

1.37927841, 1.52168268, 1.41965794, 1.26719512, 1.29530506]])

Simulating the out-of-sample x

This example simulates distinct paths for the two exogenous variables and then simulates a single path of the dependent variable. This is then repeated 100 times. We see that the variance is much higher when we account for variation in the x data.

[15]:

from numpy.random import RandomState

def sim_ar1(params: np.ndarray, initial: float, horizon: int, rng: RandomState):

    out = np.zeros(horizon)

    shocks = rng.standard_normal(horizon)

    out[0] = params[0] + params[1] * initial + shocks[0]

    for i in range(1, horizon):

        out[i] = params[0] + params[1] * out[i - 1] + shocks[i]

    return out

simulations = []

rng = RandomState(20210301)

for _ in range(100):

    x0_sim = sim_ar1(np.array([1, 0.8]), x0.iloc[-1], 10, rng)

    x1_sim = sim_ar1(np.array([2.5, 0.5]), x1.iloc[-1], 10, rng)

    x = {"x0": x0_sim, "x1": x1_sim}

    fcast = res.forecast(horizon=10, x=x, method="simulation", simulations=1)

    simulations.append(fcast.simulations.values)

Finally, the standard deviation is quite a bit larger. This is a more accurate value for the long-run variance of the forecast residuals, which should account for dynamics in the model and any exogenous regressors.

[16]:

joined = np.concatenate(simulations, 1)

joined.std(1)

[16]:

array([[3.07836131, 4.74261758, 6.27686851, 7.36299155, 8.42636761,

8.74761655, 8.88963723, 9.02413577, 9.15957464, 9.35624779]])
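The effect can be reproduced without arch in a stripped-down setting. The sketch below uses a single regressor, unit-variance normal shocks instead of GARCH errors, and made-up parameter values, so it only illustrates the mechanism and will not match the numbers above.

```python
import numpy as np

rng = np.random.default_rng(0)
n_paths, horizon = 2000, 10
phi0, phi1, beta = 3.0, 0.7, 2.0  # illustrative AR(1)-X parameters
c, rho = 1.0, 0.8  # illustrative AR(1) parameters for x
x_last, y_last = 5.0, 10.0


def simulate(random_x):
    """Simulate y paths; x follows its expected path unless random_x is True."""
    y = np.full(n_paths, y_last)
    x = np.full(n_paths, x_last)
    out = np.empty((n_paths, horizon))
    for h in range(horizon):
        x_shock = rng.standard_normal(n_paths) if random_x else 0.0
        x = c + rho * x + x_shock
        y = phi0 + phi1 * y + beta * x + rng.standard_normal(n_paths)
        out[:, h] = y
    return out


std_fixed = simulate(random_x=False).std(axis=0)
std_random = simulate(random_x=True).std(axis=0)
print(std_fixed.round(2))
print(std_random.round(2))
```

Holding x at its expected path leaves only the shocks to y, while simulating x adds the accumulated uncertainty of the regressor at every horizon.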

Conditional Mean Alignment vs. Forecast Alignment

When fitting a model with exogenous variables, the data are aligned so that the values in x[j] are used to compute the conditional mean of y[j]. For example, in the case of an AR(1)-X, the model is

\[Y_t = \phi_0 + \phi_1 Y_{t-1} + \beta X_t + \epsilon_t.\]

We can recover the conditional mean by subtracting the residuals from the original data. When we do this we see that the conditional mean of observation 0 is missing since we need one lag of \(Y\) to fit the model.

[17]:

mod = arch_model(y, x=exog, mean="ARX", lags=1)

res = mod.fit(disp="off")

y - res.resid

[17]:

0 NaN

1 -2.824955

2 -0.446759

3 2.134558

4 10.855786

...

995 6.153649

996 4.629893

997 2.482360

998 -1.981092

999 -3.503051

Length: 1000, dtype: float64

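Conditional Mean uses target alignment

When modeling the conditional mean in an AR-X, HAR-X, or LS model, the \(X\) data is target-aligned. This requires that when modeling the mean of y[t], the correct values of \(X\) must appear in x[t]. Mathematically, for \(k\) regressors, where \(X_{i,t}\) is the value of regressor \(i\) at time \(t\), the \(X\) matrix used when estimating a model should have the structure (using the Python indexing convention of a T-element data set having indices 0, 1, …, T-1):

\[\left[\begin{array}{cccc} X_{0,0} & X_{1,0} & \ldots & X_{k-1,0} \\ X_{0,1} & X_{1,1} & \ldots & X_{k-1,1} \\ \vdots & \vdots & & \vdots \\ X_{0,T-1} & X_{1,T-1} & \ldots & X_{k-1,T-1} \end{array}\right]\]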

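forecast uses origin alignment

Forecasting with \(X\) values aligns them differently. When producing a 1-step-ahead forecast for \(Y_{t+1}\) using information available at time \(t\), the \(X\) values used for this forecast must appear in row t of x. This is needed since when one wants to produce true out-of-sample forecasts (see below), the values in the final row of x passed to forecast must all occur after the final time stamp of the most recent \(Y\) value. Mathematically, the \(X\) matrix used in forecasting should have the following structure, shown here for a single regressor and horizon \(h\) (using Python indexing convention so that a \(T\) observation dataset will have indices 0, 1, …, T-1):

\[\left[\begin{array}{cccc} E[X_{1}|\mathcal{F}_{0}] & E[X_{2}|\mathcal{F}_{0}] & \ldots & E[X_{h}|\mathcal{F}_{0}] \\ E[X_{2}|\mathcal{F}_{1}] & E[X_{3}|\mathcal{F}_{1}] & \ldots & E[X_{h+1}|\mathcal{F}_{1}] \\ \vdots & \vdots & & \vdots \\ E[X_{T}|\mathcal{F}_{T-1}] & E[X_{T+1}|\mathcal{F}_{T-1}] & \ldots & E[X_{T+h-1}|\mathcal{F}_{T-1}] \end{array}\right]\]

where \(\mathcal{F}_{s}\) is the time-\(s\) information set.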

If you use the same x value in the model when forecasting, you will see different values due to this alignment difference. Naively using the same x values is equivalent to setting

\[E[X_{s}|\mathcal{F}_{s-1}] = X_{s-1}.\]

In general this would not be correct when forecasting, and will always produce forecasts that differ from the conditional mean. In order to recover the conditional mean using the forecast function, it is necessary to shift the \(X\) values by -1, so that once shifted, the x values will have the relationship

\[E[X_{s}|\mathcal{F}_{s-1}] = X_{s}.\]

Here we shift the \(X\) data by -1 so x[s] is treated as being in the information set for y[s-1]. Also, note that the final forecast is NaN. Conceptually this must be the case because the value of \(X\) at 999 should be ahead of 999 (i.e., at observation 1,000), and we do not have this value.

[18]:

exog_dict = {col: exog[[col]].shift(-1) for col in exog}

fcast = res.forecast(horizon=1, x=exog_dict, start=0)

fcast.mean

[18]:

| | h.1 |

|---|---|

| 0 | -2.824955 |

| 1 | -0.446759 |

| 2 | 2.134558 |

| 3 | 10.855786 |

| 4 | 11.641844 |

| ... | ... |

| 995 | 4.629893 |

| 996 | 2.482360 |

| 997 | -1.981092 |

| 998 | -3.503051 |

| 999 | NaN |

1000 rows × 1 columns
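The relationship between the two alignments can be checked on a toy example with pandas alone. The parameter values and the short series below are made up for the illustration, and the two formulas are written out by hand rather than taken from a fitted model.

```python
import numpy as np
import pandas as pd

phi0, phi1, beta = 1.0, 0.5, 2.0  # illustrative parameters
y = pd.Series([1.0, 2.0, 3.0, 4.0])
x = pd.Series([0.5, 1.5, 2.5, 3.5])

# Target alignment: the mean of y[t] uses y[t-1] and x[t]; row 0 has no lag
cond_mean = phi0 + phi1 * y.shift(1) + beta * x

# Origin alignment: row t forecasts y[t+1], so it needs x[t+1], i.e. x shifted by -1
one_step = phi0 + phi1 * y + beta * x.shift(-1)

print(pd.DataFrame({"cond_mean": cond_mean, "one_step": one_step}))
```

The two columns contain the same numbers offset by one row, with the missing value moving from the first row to the last, which is the pattern seen in the two outputs above.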

(Nearly) out-of-sample forecasts

These “in-sample” forecasts are not really forecasts at all but are just fitted values with a different alignment. If you want real (nearly) out-of-sample forecasts\(\dagger\), it is necessary to replace the actual values of \(X\) with their conditional expectation. This can be done by taking the fitted values from AR(1) models of the \(X\) variables.

\(\dagger\) These are not true out-of-sample forecasts since the parameters were estimated using data from the same range of indices that these forecasts target. True out-of-sample forecasting requires both using forecast \(X\) values and parameters estimated without the period being forecast.

[19]:

res0 = ARX(exog["x0"], lags=1).fit()

res1 = ARX(exog["x1"], lags=1).fit()

forecast_x = pd.concat(

[res0.forecast(start=0).mean, res1.forecast(start=0).mean], axis=1

)

forecast_x.columns = ["x0f", "x1f"]

in_samp_forcast_exog = {"x0": forecast_x[["x0f"]], "x1": forecast_x[["x1f"]].shift(-1)}

fcast = res.forecast(horizon=1, x=in_samp_forcast_exog, start=0)

fcast.mean

[19]:

| | h.1 |

|---|---|

| 0 | -3.725907 |

| 1 | 0.849168 |

| 2 | 1.774699 |

| 3 | 4.802985 |

| 4 | 14.053397 |

| ... | ... |

| 995 | 6.242001 |

| 996 | 2.973822 |

| 997 | 0.152542 |

| 998 | -1.722523 |

| 999 | NaN |

1000 rows × 1 columns
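The idea of replacing each \(X\) with its conditional expectation can be sketched with plain least squares. This is an assumption-laden stand-in for the ARX fits used in the notebook: the series is simulated here, and OLS via `np.linalg.lstsq` replaces maximum likelihood estimation.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 2000
shocks = rng.standard_normal(n)

# Simulate an AR(1) regressor with illustrative parameters (1.0, 0.8)
x = np.empty(n)
x[0] = 5.0
for t in range(1, n):
    x[t] = 1.0 + 0.8 * x[t - 1] + shocks[t]

# Regress x[t] on a constant and x[t-1]
design = np.column_stack([np.ones(n - 1), x[:-1]])
const_hat, phi_hat = np.linalg.lstsq(design, x[1:], rcond=None)[0]

# Row t holds the estimated expectation of x[t+1] formed at time t
expected_next = const_hat + phi_hat * x
print(round(const_hat, 3), round(phi_hat, 3))
```

Using these expectations in place of the realized values removes the information about the next period's \(X\) that would not be available at the forecast origin.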

True out-of-sample forecasts

In order to make a true out-of-sample prediction, we need the expected values of \(X\) from the end of the data we have. These can be constructed by forecasting the two \(X\) variables and then passing these values as x to forecast.

[20]:

mod = arch_model(y, x=exog, mean="ARX", lags=1)

res = mod.fit(disp="off")

actual_x_oos = {

"x0": res0.forecast(horizon=10).mean,

"x1": res1.forecast(horizon=10).mean,

}

fcasts = res.forecast(horizon=10, x=actual_x_oos)

fcasts.mean

[20]:

| | h.01 | h.02 | h.03 | h.04 | h.05 | h.06 | h.07 | h.08 | h.09 | h.10 |

|---|---|---|---|---|---|---|---|---|---|---|

| 999 | -1.485125 | 0.023716 | 1.584431 | 3.005646 | 4.223329 | 5.231824 | 6.049987 | 6.704902 | 7.22437 | 7.633725 |